Using AI Agents to Automate EU AI Act and GDPR Compliance

AI compliance agents automate EU AI Act and GDPR tasks—tracking AI systems, drafting Annex IV docs, managing oversight, and streamlining privacy workflows without slowing delivery.

Compliance teams didn’t suddenly forget how to do their jobs; the work simply exploded. The EU AI Act adds product-safety-style governance on top of existing privacy rules, while engineering ships models faster than legal can review them. That’s the gap AI compliance agents are meant to close: software that continuously inventories systems, drafts the right documents, nudges teams to collect evidence, and keeps your files audit-ready, all without bringing every release to a standstill.

What an “AI compliance agent” actually is (and isn’t)

Think of an agent as a supervised coworker. It connects to your code repos, model registry, cloud accounts, tickets, and logs; reads policies and the relevant regulatory text; and then proposes actions and artifacts you approve. It doesn’t replace counsel or the accountable owner. It standardizes the boring parts—classification, document scaffolding, reminders, and checks—so humans spend time on real judgment calls.

Where agents help most with the EU AI Act

System inventory & classification. The agent watches your repos, MLOps registry, and cloud accounts to auto-discover AI features. For each system it then proposes your organization’s role (provider or deployer), the intended purpose, and a preliminary risk tier. You approve the record, and the system becomes trackable from day one.
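The following minimal Python sketch shows an inventory record and a keyword-based preliminary classifier. The tier names follow the Act's commonly cited risk categories. Several parts are hypothetical simplifications: the tag sets (`HIGH_RISK_HINTS`, `TRANSPARENCY_HINTS`), the `propose_record` helper, and the rule that in-house systems default to the provider role. Real classification has to follow the Act's text and be confirmed by a named reviewer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Set


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"  # transparency obligations only
    MINIMAL = "minimal"


# Hypothetical discovery tags; NOT a substitute for a proper Annex III analysis.
HIGH_RISK_HINTS = {"recruitment", "credit_scoring", "education_admission"}
TRANSPARENCY_HINTS = {"chatbot", "synthetic_media"}


@dataclass
class InventoryRecord:
    system_id: str
    intended_purpose: str
    role: Role
    proposed_tier: RiskTier
    approved_by: Optional[str] = None  # set only when a named human signs off

    @property
    def is_tracked(self) -> bool:
        return self.approved_by is not None


def propose_record(system_id: str, purpose: str, tags: Set[str], built_in_house: bool) -> InventoryRecord:
    """Draft a record for human review; the agent never approves it itself."""
    # Simplification: in-house systems default to "provider", bought-in ones to "deployer".
    role = Role.PROVIDER if built_in_house else Role.DEPLOYER
    if tags & HIGH_RISK_HINTS:
        tier = RiskTier.HIGH
    elif tags & TRANSPARENCY_HINTS:
        tier = RiskTier.LIMITED
    else:
        tier = RiskTier.MINIMAL
    return InventoryRecord(system_id, purpose, role, tier)


record = propose_record("cv-screener", "rank job applicants", {"recruitment"}, built_in_house=True)
print(record.role.value, record.proposed_tier.value, record.is_tracked)  # provider high False
```

The record stays untracked until `approved_by` is filled in. This mirrors the approve-before-track step described above.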

Annex IV technical documentation. Instead of starting from a blank page, the agent assembles a live technical file: it pulls model cards, training/validation details, evaluation results, robustness and cybersecurity notes, change logs, and usage instructions. As code or datasets change, it suggests doc updates and links the exact evidence.
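One way to keep a technical file “live” is to store a content fingerprint with every piece of linked evidence. A section is then flagged whenever its source changes. The sketch below assumes evidence can be fetched as bytes by a URI. The section names and the `registry://` and `repo://` URIs are hypothetical placeholders, not the official Annex IV headings.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Set


def fingerprint(content: bytes) -> str:
    return hashlib.sha256(content).hexdigest()


@dataclass
class EvidenceLink:
    section: str        # hypothetical section label, e.g. "performance_metrics"
    source_uri: str     # hypothetical pointer into a repo or model registry
    recorded_hash: str  # fingerprint of the evidence when it was linked


@dataclass
class TechnicalFile:
    system_id: str
    links: List[EvidenceLink] = field(default_factory=list)

    def link(self, section: str, source_uri: str, content: bytes) -> None:
        self.links.append(EvidenceLink(section, source_uri, fingerprint(content)))

    def stale_sections(self, current: Dict[str, bytes]) -> Set[str]:
        """Sections whose linked evidence changed or can no longer be found."""
        stale = set()
        for link in self.links:
            latest = current.get(link.source_uri)
            if latest is None or fingerprint(latest) != link.recorded_hash:
                stale.add(link.section)
        return stale


tf = TechnicalFile("cv-screener")
tf.link("performance_metrics", "registry://cv-screener/eval.json", b'{"auc": 0.91}')
tf.link("data_description", "repo://data/README.md", b"v1")
print(tf.stale_sections({
    "registry://cv-screener/eval.json": b'{"auc": 0.88}',  # evaluation was re-run
    "repo://data/README.md": b"v1",
}))  # {'performance_metrics'}
```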

Risk management & data governance. It prompts teams to record hazard analyses, fairness tests, and mitigation decisions. If your telemetry shows drift or performance degradation, it files an issue, proposes a re-validation step, and reminds owners to update the technical file.
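The drift-to-issue step could look like the sketch below. It swaps in the Population Stability Index (PSI), one common drift statistic, computed over pre-binned feature proportions. The 0.2 alert threshold is a widely used rule of thumb, not a regulatory value. The issue fields and the `drift_issue` helper are hypothetical.

```python
import math
from typing import List, Optional


def psi(expected: List[float], actual: List[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions)."""
    if len(expected) != len(actual):
        raise ValueError("both distributions need the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_issue(system_id: str, owner: str, baseline: List[float],
                current: List[float], threshold: float = 0.2) -> Optional[dict]:
    """Return a proposed issue if drift reaches the threshold, else None."""
    score = psi(baseline, current)
    if score < threshold:
        return None
    return {
        "title": f"[{system_id}] input drift detected (PSI={score:.3f})",
        "assignee": owner,
        "tasks": [
            "re-run the validation suite",
            "record the outcome in the risk log",
            "update the technical file",
        ],
    }


issue = drift_issue("cv-screener", "ml-owner@example.com",
                    baseline=[0.25, 0.25, 0.25, 0.25],
                    current=[0.10, 0.20, 0.30, 0.40])
print(issue["title"] if issue else "no drift")
```

The function only proposes an issue. Filing it in your tracker, and deciding whether re-validation is really needed, stays with the owner.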

Human oversight you can prove. Oversight only counts if it’s real. The agent helps define intervention points, capture who approved what and when, and keep an audit trail of overrides—so “a human in the loop” isn’t just a sentence in a policy.
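A tamper-evident audit trail is one way to make oversight provable. This sketch hash-chains each approval or override to the previous entry with SHA-256, so editing any past entry breaks verification. The field names and the `OversightLog` class are assumptions. A production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, str]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class OversightLog:
    entries: List[Dict[str, str]] = field(default_factory=list)

    def record(self, actor: str, action: str, decision_id: str, reason: str = "") -> Dict[str, str]:
        body = {
            "actor": actor,
            "action": action,  # e.g. "approve" or "override"
            "decision_id": decision_id,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        body["hash"] = _digest(body)  # hash covers every field above, including "prev"
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry makes this False."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = OversightLog()
log.record("dr.lee", "override", "loan-4711", "income verified manually")
log.record("dr.lee", "approve", "loan-4712")
print(log.verify())  # True
```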

Transparency and user information. It maintains approved disclosure language (chatbot notices, synthetic-media labels, instructions for use) and checks that releases include them on the right surfaces. If a product team forgets to add a disclosure, the agent flags it during review.
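A release-time disclosure check can be as simple as comparing a release manifest against the approved library. The library entries, disclosure IDs, surface names, and manifest shape below are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical library: feature type -> (approved disclosure id, surfaces that must show it)
DISCLOSURE_LIBRARY: Dict[str, Tuple[str, Set[str]]] = {
    "chatbot": ("ai-interaction-notice-v2", {"chat_widget"}),
    "synthetic_media": ("synthetic-content-label-v1", {"image_export", "video_export"}),
}


def missing_disclosures(release: dict) -> List[str]:
    """Compare a release manifest with the library and list every gap."""
    present: Dict[str, Set[str]] = release.get("disclosures", {})  # surface -> disclosure ids
    findings = []
    for feature in release.get("features", []):
        if feature not in DISCLOSURE_LIBRARY:
            continue  # no disclosure requirement recorded for this feature type
        disclosure_id, surfaces = DISCLOSURE_LIBRARY[feature]
        for surface in sorted(surfaces):
            if disclosure_id not in present.get(surface, set()):
                findings.append(f"{feature}: '{disclosure_id}' missing on {surface}")
    return findings


release = {
    "features": ["chatbot", "synthetic_media"],
    "disclosures": {
        "chat_widget": {"ai-interaction-notice-v2"},
        "image_export": {"synthetic-content-label-v1"},
    },
}
print(missing_disclosures(release))
# ["synthetic_media: 'synthetic-content-label-v1' missing on video_export"]
```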

Conformity assessment readiness. For high-risk systems, the agent tracks what evidence is missing for your quality management system, schedules pre-assessment dry runs, and produces a tidy index so you’re not hunting for screenshots and spreadsheets the week before launch.
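An evidence index might be built along these lines: compare the evidence your checklist requires with what has actually been collected, grouped by area. The checklist keys, areas, and storage locations are placeholders. Derive the real list from your conformity assessment route and QMS procedures.

```python
from typing import Dict, List, Tuple


def readiness_index(required: Dict[str, str], collected: Dict[str, str]) -> dict:
    """required: evidence key -> area; collected: evidence key -> where it is stored."""
    index: Dict[str, List[Tuple[str, str]]] = {}
    for key in sorted(required):
        index.setdefault(required[key], []).append((key, collected.get(key, "MISSING")))
    missing = [key for key in sorted(required) if key not in collected]
    done = len(required) - len(missing)
    return {
        "index": index,
        "missing": missing,
        "complete_pct": round(100 * done / len(required), 1) if required else 100.0,
    }


required = {
    "qms_design_control_procedure": "qms",
    "qms_supplier_management": "qms",
    "risk_register": "technical_file",
    "robustness_test_report": "technical_file",
}
collected = {
    "qms_design_control_procedure": "wiki://qms/design-control",
    "risk_register": "repo://compliance/risk.xlsx",
    "robustness_test_report": "registry://cv-screener/robustness.pdf",
}
report = readiness_index(required, collected)
print(report["missing"], report["complete_pct"])  # ['qms_supplier_management'] 75.0
```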

Post-market monitoring & incidents. Once in production, the agent watches agreed signals—accuracy, robustness, safety—and opens an incident workflow when thresholds are crossed. It collects facts, timelines, and remediation notes while you work, so reporting isn’t a panicked reconstruction.
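Opening an incident and collecting its timeline as work happens might be sketched as follows. The metric names and limits in `THRESHOLDS` are hypothetical stand-ins for the signals and thresholds you agree in your post-market monitoring plan. The `Incident` class is likewise illustrative.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional, Tuple

# Hypothetical agreed limits: metric -> ("min" or "max", limit)
THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "robustness_pass_rate": ("min", 0.95),
    "safety_flags_per_1k": ("max", 2.0),
}


@dataclass
class Incident:
    system_id: str
    trigger: str
    timeline: List[Tuple[datetime, str]] = field(default_factory=list)

    def note(self, text: str, at: Optional[datetime] = None) -> None:
        """Record a fact as it happens; use timezone-aware UTC timestamps."""
        self.timeline.append((at or datetime.now(timezone.utc), text))

    def report(self) -> str:
        lines = [f"Incident on {self.system_id}: {self.trigger}"]
        lines += [f"{at.isoformat()}  {text}" for at, text in sorted(self.timeline)]
        return "\n".join(lines)


def check_signals(system_id: str, signals: Dict[str, float]) -> Optional[Incident]:
    """Open an incident if any agreed threshold is crossed."""
    breaches = []
    for metric, value in signals.items():
        if metric not in THRESHOLDS:
            continue
        kind, limit = THRESHOLDS[metric]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            breaches.append(f"{metric}={value} breaches {kind} {limit}")
    if not breaches:
        return None
    incident = Incident(system_id, "; ".join(breaches))
    incident.note("incident opened automatically from monitoring")
    return incident


incident = check_signals("cv-screener", {"accuracy": 0.86, "safety_flags_per_1k": 0.4})
if incident:
    incident.note("owner paged; model rolled back to previous version")
    print(incident.report())
```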

Where agents help with GDPR (and CCPA)

Records of processing and data mapping. As engineers add features, the agent proposes updates to your records of processing activities (systems, purposes, data categories, retention periods). It keeps data-flow diagrams fresh by reading infrastructure metadata instead of relying on annual interviews.
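Keeping records current from infrastructure metadata could work roughly like this: aggregate resource tags per system, diff them against the existing records, and propose additions or updates. The tag keys (`system`, `purpose`, `data_categories`, `retention_days`) are a hypothetical tagging convention; real cloud metadata will look different.

```python
from typing import Dict, List


def observed_processing(resources: List[dict]) -> Dict[str, dict]:
    """Aggregate hypothetical resource tags into one view per system."""
    systems: Dict[str, dict] = {}
    for res in resources:
        tags = res.get("tags", {})
        name = tags.get("system")
        if not name:
            continue  # untagged resources need a separate triage step
        rec = systems.setdefault(name, {"purposes": set(), "data_categories": set(), "retention_days": 0})
        if tags.get("purpose"):
            rec["purposes"].add(tags["purpose"])
        rec["data_categories"].update(c for c in tags.get("data_categories", "").split(",") if c)
        # Conservatively keep the longest retention period observed.
        rec["retention_days"] = max(rec["retention_days"], int(tags.get("retention_days", 0)))
    return systems


def propose_updates(ropa: Dict[str, dict], resources: List[dict]) -> List[dict]:
    """Proposals for a human to accept or reject; nothing is written automatically."""
    proposals = []
    for name, seen in sorted(observed_processing(resources).items()):
        recorded = ropa.get(name)
        if recorded is None:
            proposals.append({"system": name, "change": "add", "proposed": seen})
            continue
        diff = {k: {"recorded": recorded.get(k), "observed": v}
                for k, v in seen.items() if recorded.get(k) != v}
        if diff:
            proposals.append({"system": name, "change": "update", "diff": diff})
    return proposals


ropa = {"crm": {"purposes": {"customer_support"}, "data_categories": {"contact"}, "retention_days": 365}}
resources = [
    {"tags": {"system": "crm", "purpose": "customer_support",
              "data_categories": "contact,location", "retention_days": "365"}},
    {"tags": {"system": "recsys", "purpose": "personalization",
              "data_categories": "behavioral", "retention_days": "90"}},
]
for proposal in propose_updates(ropa, resources):
    print(proposal["system"], proposal["change"])
```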

DPIAs, TIAs, and risk notes. For features that touch sensitive data, involve automated decision-making, or transfer personal data outside the EEA, the agent pre-fills the relevant assessments with what it already knows (purposes, the lawful bases you typically use, safeguards in place) and routes the draft to privacy for review.
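A pre-fill step might screen a feature descriptor for common triggers and draft the assessments it needs. The screening rules below are a deliberately simplified, hypothetical reading of when a DPIA or TIA is typically needed. `typical_bases` stands in for your organization's usual lawful-basis choices. Anything unknown is left for the privacy team to confirm.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FeatureProfile:
    name: str
    purposes: List[str]
    special_category_data: bool = False
    large_scale: bool = False
    significant_automated_decisions: bool = False
    transfers_outside_eea: bool = False
    safeguards: List[str] = field(default_factory=list)


def draft_assessments(feature: FeatureProfile, typical_bases: Dict[str, str]) -> List[dict]:
    """Simplified, hypothetical screening; privacy counsel makes the real call."""
    needed = []
    if (feature.special_category_data and feature.large_scale) or feature.significant_automated_decisions:
        needed.append("DPIA")
    if feature.transfers_outside_eea:
        needed.append("TIA")
    return [
        {
            "type": kind,
            "feature": feature.name,
            "purposes": list(feature.purposes),
            # Pre-fill from the organization's usual choices; flag anything unknown.
            "lawful_bases": {p: typical_bases.get(p, "TO CONFIRM") for p in feature.purposes},
            "safeguards": list(feature.safeguards),
            "status": "draft, routed to privacy for review",
        }
        for kind in needed
    ]


fraud = FeatureProfile(
    "fraud-scoring", ["fraud_prevention", "model_improvement"],
    significant_automated_decisions=True, transfers_outside_eea=True,
    safeguards=["pseudonymization", "human review of declines"],
)
for draft in draft_assessments(fraud, {"fraud_prevention": "legitimate_interests"}):
    print(draft["type"], draft["lawful_bases"])
```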

Data subject request workflow. It triages incoming requests, locates data across systems, drafts a response for human approval, and logs deadlines, exceptions, and proof of fulfillment.
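Deadline tracking is mostly date arithmetic. This sketch uses GDPR's one-month response period (extendable by two further months) and the CCPA's 45 days (extendable once by another 45). It ignores weekends, holidays, and identity-verification pauses. When the target month is shorter than the start date allows, it rolls back to that month's last day, which is an assumption to confirm with counsel.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Same day N months later, clamped to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def response_deadline(received: date, regime: str, extended: bool = False) -> date:
    if regime == "gdpr":
        # One month, plus up to two further months if extended.
        return add_months(received, 3 if extended else 1)
    if regime == "ccpa":
        # 45 days, extendable once by another 45.
        return received + timedelta(days=90 if extended else 45)
    raise ValueError(f"unknown regime: {regime}")


print(response_deadline(date(2024, 1, 31), "gdpr"))  # 2024-02-29
print(response_deadline(date(2024, 3, 5), "ccpa"))   # 2024-04-19
```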

Vendor and GPAI dependencies. If you rely on third-party models or APIs, the agent tracks versions, model cards, security attestations, change notices, and usage restrictions. When an upstream model updates, it suggests a lightweight re-assessment and a documentation refresh.
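Upstream-change detection could compare the dependency versions you last assessed with what you observe now. `ModelDependency`, its fields, and the vendor and model names are hypothetical. Hashing the model card also catches documentation changes that arrive without a version bump.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ModelDependency:
    vendor: str
    model: str
    version: str
    model_card_sha256: str  # hash of the vendor's published model card


def reassessment_tasks(assessed: Dict[str, ModelDependency],
                       observed: Dict[str, ModelDependency]) -> List[str]:
    """Compare what was last assessed with what is running now."""
    tasks = []
    for key, old in sorted(assessed.items()):
        new = observed.get(key)
        if new is None:
            tasks.append(f"{key}: no longer observed; confirm removal or replacement")
        elif new.version != old.version:
            tasks.append(f"{key}: {old.version} -> {new.version}; re-assess and refresh docs")
        elif new.model_card_sha256 != old.model_card_sha256:
            tasks.append(f"{key}: model card changed without a version bump; review restrictions")
    for key in sorted(observed.keys() - assessed.keys()):
        tasks.append(f"{key}: new dependency; add to inventory and assess")
    return tasks


assessed = {"summarizer": ModelDependency("acme", "acme-lm", "1.0", "aaa")}
observed = {
    "summarizer": ModelDependency("acme", "acme-lm", "1.1", "bbb"),
    "embedder": ModelDependency("acme", "acme-embed", "2.0", "ccc"),
}
for task in reassessment_tasks(assessed, observed):
    print(task)
```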

Guardrails that make this safe

Two disciplines matter more than clever prompts: approval workflows and audit logs. Every significant action—classification decisions, policy mappings, doc changes—should have a named owner, timestamp, and diff. Pair that with least-privilege access (read-only where possible, scoped write access where necessary) and separation of duties between engineering, privacy, and compliance. The result is speed without mystery.
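These guardrails translate naturally into code. In the sketch below, a change request carries its author, timestamps, and a diff, and approval enforces separation of duties. The user names, the `ROLES` map, and the `APPROVERS` policy are hypothetical. In practice they would come from your identity provider and governance policy.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical user -> function map; normally sourced from your identity provider.
ROLES = {"agent": "automation", "ana": "engineering", "priya": "privacy", "colm": "compliance"}
# Hypothetical policy: which functions may approve which kinds of change.
APPROVERS = {
    "classification": {"compliance"},
    "policy_mapping": {"compliance", "privacy"},
    "doc_change": {"compliance", "privacy", "engineering"},
}


@dataclass
class ChangeRequest:
    kind: str
    author: str
    before: str
    after: str
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    approved_by: Optional[str] = None
    approved_at: Optional[datetime] = None

    @property
    def diff(self) -> str:
        return "".join(difflib.unified_diff(
            self.before.splitlines(keepends=True),
            self.after.splitlines(keepends=True),
            "before", "after"))

    def approve(self, approver: str) -> None:
        # Separation of duties: nobody approves their own change...
        if approver == self.author:
            raise PermissionError("authors cannot approve their own changes")
        # ...and only the functions named in the policy may approve this kind of change.
        if ROLES.get(approver) not in APPROVERS.get(self.kind, set()):
            raise PermissionError(f"{approver} may not approve {self.kind} changes")
        self.approved_by = approver
        self.approved_at = datetime.now(timezone.utc)


change = ChangeRequest("classification", "agent", "tier: minimal\n", "tier: high\n")
print(change.diff)
change.approve("colm")
print(change.approved_by, change.approved_at is not None)  # colm True
```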

How to roll it out (30/60/90)

First 30 days: Pick one AI product as a pilot. Connect the agent, with read-only access, to your repos, model registry, and logging. Let it build the inventory entry, propose a risk classification, and scaffold the Annex IV technical file. Keep approvals tight and review every suggestion.

Days 31–60: Expand to two or three more products, integrate with your ticketing system, and turn on post-market monitoring suggestions (issues auto-opened with owners and SLAs). Establish your disclosure library and standard oversight patterns.

Days 61–90: Switch from reactive to routine. Schedule pre-release checks, quarterly re-validations, and vendor/GPAI dependency reviews. Start measuring two things: the cycle time to produce or update documentation, and your incident response readiness.

What to measure

Pick a small set of KPIs you can improve month over month: time to create/update a technical file, % of AI systems with complete inventory records, % of releases with required disclosures on first pass, mean time to fulfill data subject requests, and time from detection to documented closure for post-market issues. Improvement here is the clearest proof the program is working.
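Most of these KPIs are simple ratios and averages over records the agent already keeps. The sketch assumes hypothetical record shapes: inventory dicts, release dicts with a first-review findings count, and (received, fulfilled) date pairs for data subject requests.

```python
from datetime import date
from statistics import mean
from typing import List, Tuple

# Hypothetical fields a record needs before it counts as "complete".
REQUIRED_FIELDS = ("role", "purpose", "tier", "approved_by")


def pct(part: int, whole: int) -> float:
    return round(100 * part / whole, 1) if whole else 0.0


def monthly_kpis(systems: List[dict], releases: List[dict],
                 dsrs: List[Tuple[date, date]]) -> dict:
    complete = sum(1 for s in systems if all(s.get(f) for f in REQUIRED_FIELDS))
    first_pass = sum(1 for r in releases if r.get("disclosure_findings_first_review", 0) == 0)
    days = [(fulfilled - received).days for received, fulfilled in dsrs]
    return {
        "inventory_complete_pct": pct(complete, len(systems)),
        "disclosures_first_pass_pct": pct(first_pass, len(releases)),
        "mean_days_to_fulfill_dsr": round(mean(days), 1) if days else None,
    }


systems = [
    {"role": "provider", "purpose": "screening", "tier": "high", "approved_by": "colm"},
    {"role": "deployer", "purpose": "support", "tier": "limited", "approved_by": "priya"},
    {"role": "deployer", "purpose": "search", "tier": "minimal"},  # not yet approved
]
releases = [{"disclosure_findings_first_review": 0}, {"disclosure_findings_first_review": 2}]
dsrs = [(date(2024, 3, 1), date(2024, 3, 11)), (date(2024, 3, 1), date(2024, 3, 21))]
print(monthly_kpis(systems, releases, dsrs))
```

Tracking the same function month over month gives the trend line the section recommends.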

Where WALLD fits

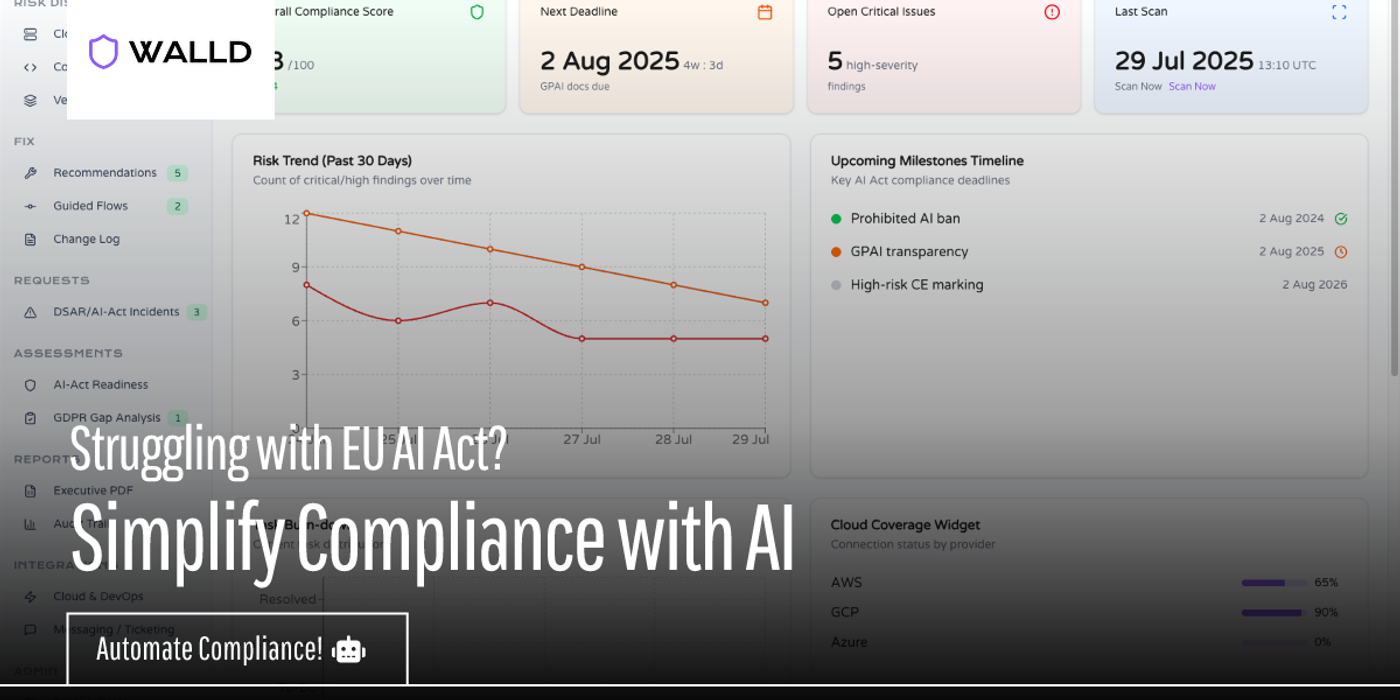

WALLD is built to be this kind of supervised coworker. It discovers AI systems, proposes EU AI Act classifications, drafts Annex IV-ready documentation with linked evidence, maintains disclosure libraries, orchestrates oversight and post-market checks, and keeps privacy artifacts in sync, so your engineers keep shipping and your compliance files stay audit-ready.

This article provides general information and is not legal advice. For specific interpretations, consult qualified counsel.